The third in our series of tutorials on Simple Neural Networks. This time, we’re looking a bit deeper into the maths, specifically focusing on vectorisation. This is an important step before we can translate our maths into a functioning script in Python.

So we’ve been through the maths of a neural network (NN) using back propagation and taken a look at the different activation functions that we could implement. This post puts that mathematics into vectorised form, which we can then translate into Python and piece together into a functioning NN!

Forward Propagation

Let’s remind ourselves of our notation from our 2-layer network in the maths tutorial:

- $I$ is our input layer

- $J$ is our hidden layer

- $w_{ij}$ is the weight connecting the $i^{\text{th}}$ node in $I$ to the $j^{\text{th}}$ node in $J$

- $x_{j}$ is the total input to the $j^{\text{th}}$ node in $J$

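So, assuming that we have three features (nodes) in the input layer, and writing $\mathcal{O}_{i}$ for the output of the $i^{\text{th}}$ node in $I$, the input to the first node in the hidden layer is given by:

$$
x_{1} = \mathcal{O}_{1} w_{11} + \mathcal{O}_{2} w_{21} + \mathcal{O}_{3} w_{31}
$$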

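Let's generalise this for any connected nodes in any layer: with $\mathcal{O}_{i}$ the output of node $i$ in layer $l-1$, the input to node $j$ in layer $l$ is:

$$
x_{j} = \sum_{i} \mathcal{O}_{i} \, w_{ij}
$$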

But we need to be careful and remember to put in our bias term $\theta$. In our maths tutorial, we said that the bias term was always equal to 1; now we can try to understand why.

We could just add the bias term onto the end of the previous equation to get:

$$
x_{j} = \sum_{i} \mathcal{O}_{i} \, w_{ij} + \theta_{j}
$$

If we think more carefully about this, what we are really saying is that “an extra node in the previous layer, which always outputs the value 1, is connected to the node $j$ in the current layer by some weight $w_{4j}$”, i.e. $1 \cdot w_{4j}$:

$$
x_{j} = \mathcal{O}_{1} w_{1j} + \mathcal{O}_{2} w_{2j} + \mathcal{O}_{3} w_{3j} + 1 \cdot w_{4j}
$$

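By the magic of matrix multiplication, we should be able to convince ourselves that:

$$
x_{j} =
\begin{pmatrix} w_{1j} & w_{2j} & w_{3j} & w_{4j} \end{pmatrix}
\begin{pmatrix} \mathcal{O}_{1} \\ \mathcal{O}_{2} \\ \mathcal{O}_{3} \\ 1 \end{pmatrix}
$$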

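Now, let's be a little more explicit and consider the input $x$ to the first two nodes of the layer $J$:

$$
\begin{aligned}
x_{1} &=
\begin{pmatrix} w_{11} & w_{21} & w_{31} & w_{41} \end{pmatrix}
\begin{pmatrix} \mathcal{O}_{1} \\ \mathcal{O}_{2} \\ \mathcal{O}_{3} \\ 1 \end{pmatrix} \\
x_{2} &=
\begin{pmatrix} w_{12} & w_{22} & w_{32} & w_{42} \end{pmatrix}
\begin{pmatrix} \mathcal{O}_{1} \\ \mathcal{O}_{2} \\ \mathcal{O}_{3} \\ 1 \end{pmatrix}
\end{aligned}
$$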

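Note that the second matrix is constant between the input calculations as it is only the output values of the previous layer (including the bias term). This means (again by the magic of matrix multiplication) that we can construct a single vector containing the input values $x$ to the current layer:

$$
\begin{pmatrix} x_{1} \\ x_{2} \end{pmatrix} =
\begin{pmatrix}
w_{11} & w_{21} & w_{31} & w_{41} \\
w_{12} & w_{22} & w_{32} & w_{42}
\end{pmatrix}
\begin{pmatrix} \mathcal{O}_{1} \\ \mathcal{O}_{2} \\ \mathcal{O}_{3} \\ 1 \end{pmatrix}
$$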

This is an $\left(n \times (m+1) \right)$ matrix multiplied with an $\left((m+1) \times 1 \right)$ column vector, where:

- $n$ is the number of nodes in the current layer $l$

- $m$ is the number of nodes in the previous layer $l-1$
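To make those shapes concrete, here is a minimal sketch of the step above. It assumes NumPy (the tutorial has only said "Python" so far), and the names `weights`, `bias` and `outputs_prev`, along with the random values, are purely illustrative. The only point is that folding the bias into an extra column of weights gives the same inputs $x$ as adding it on separately.

```python
import numpy as np

rng = np.random.default_rng(0)

m, n = 3, 2                          # m nodes in the previous layer, n in the current layer
outputs_prev = rng.random((m, 1))    # column vector of outputs from layer l-1
weights = rng.random((n, m))         # row j holds the weights running into node j
bias = rng.random((n, 1))            # one bias weight per node in the current layer

# Explicit form: weighted sum plus a separate bias term.
x_explicit = weights @ outputs_prev + bias

# Absorbed form: the bias weights become an extra column,
# fed by an extra node that always outputs 1.
weights_aug = np.hstack([weights, bias])                   # shape (n, m + 1)
outputs_aug = np.vstack([outputs_prev, np.ones((1, 1))])   # shape (m + 1, 1)
x_absorbed = weights_aug @ outputs_aug                     # shape (n, 1)

# Both routes give the same inputs to the current layer.
assert np.allclose(x_explicit, x_absorbed)
```

Keeping the bias inside the weight matrix means a layer needs only one matrix product, at the cost of remembering to append the row of ones to its input.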

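Let's generalise: the vector of inputs to the $n$ nodes in the current layer from the $m$ nodes in the previous layer is:

$$
\begin{pmatrix} x_{1} \\ x_{2} \\ \vdots \\ x_{n} \end{pmatrix} =
\begin{pmatrix}
w_{11} & w_{21} & \cdots & w_{(m+1)1} \\
w_{12} & w_{22} & \cdots & w_{(m+1)2} \\
\vdots & \vdots & \ddots & \vdots \\
w_{1n} & w_{2n} & \cdots & w_{(m+1)n}
\end{pmatrix}
\begin{pmatrix} \mathcal{O}_{1} \\ \mathcal{O}_{2} \\ \vdots \\ \mathcal{O}_{m} \\ 1 \end{pmatrix}
$$

or:

$$
\mathbf{\vec{x}_{J}} = \mathbf{W_{IJ}} \, \mathbf{\vec{\mathcal{O}}_{I}}
$$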

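In this notation, the output from the current layer $J$ is easily written as:

$$
\mathbf{\vec{\mathcal{O}}_{J}} = \sigma \left( \mathbf{W_{IJ}} \, \mathbf{\vec{\mathcal{O}}_{I}} \right)
$$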

Where $\sigma$ is the activation or transfer function chosen for this layer which is applied elementwise to the product of the matrices.
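As a rough sketch of how a single layer's output might look in code, assuming NumPy and a sigmoid transfer function: the helper `layer_output` and the example numbers are hypothetical, chosen only so the arithmetic is easy to follow by hand.

```python
import numpy as np

def sigmoid(x):
    """Sigmoid transfer function, applied elementwise."""
    return 1.0 / (1.0 + np.exp(-x))

def layer_output(weights_aug, outputs_prev):
    """Output of one layer.

    weights_aug  : (n, m + 1) weights, with the bias weights in the last column
    outputs_prev : (m, 1) outputs from the previous layer
    """
    # Append the always-1 bias node to the previous layer's outputs.
    ones = np.ones((1, outputs_prev.shape[1]))
    outputs_aug = np.vstack([outputs_prev, ones])
    # Matrix product first, then the transfer function elementwise.
    return sigmoid(weights_aug @ outputs_aug)

W_IJ = np.array([[0.2, -0.4, 0.1, 0.3],
                 [0.5, 0.5, -0.5, 1.0]])   # 2 hidden nodes, 3 inputs + bias
O_I = np.array([[1.0], [2.0], [3.0]])      # one input example as a column

O_J = layer_output(W_IJ, O_I)              # shape (2, 1)
```

Here the inputs to the two hidden nodes work out as $0$ and $1$, so `O_J` holds $\sigma(0)$ and $\sigma(1)$.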

This notation allows us to calculate the output of a layer very efficiently, which reduces computation time. Additionally, we can extend this efficiency by making our network consider all of our input examples at once.

Remember that our network requires training (many epochs of forward propagation followed by back propagation) and as such needs training data (preferably a lot of it!). Rather than consider each training example individually, we vectorise each example into a large matrix of inputs.

Our weights $\mathbf{W_{IJ}}$ connecting the layer $I$ to layer $J$ are the same no matter which input example we put into the network. This is fundamental, as we expect that the network would act the same way for similar inputs, i.e. we expect the same neurons (nodes) to fire based on similar features in the input.

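If 2 input examples gave the outputs $ \mathbf{\vec{\mathcal{O}}_{I_{1}}} $ and $ \mathbf{\vec{\mathcal{O}}_{I_{2}}} $ from the nodes in layer $I$ to a layer $J$ then, writing $\mathcal{O}_{I_{e}}^{i}$ for the output of the $i^{\text{th}}$ node of $I$ for example $e$, the outputs from layer $J$, $\mathbf{\vec{\mathcal{O}}_{J_{1}}}$ and $\mathbf{\vec{\mathcal{O}}_{J_{2}}}$, can be written:

$$
\begin{pmatrix} \mathbf{\vec{\mathcal{O}}_{J_{1}}} & \mathbf{\vec{\mathcal{O}}_{J_{2}}} \end{pmatrix} =
\sigma \left( \mathbf{W_{IJ}}
\begin{pmatrix}
\mathcal{O}_{I_{1}}^{1} & \mathcal{O}_{I_{2}}^{1} \\
\vdots & \vdots \\
\mathcal{O}_{I_{1}}^{m} & \mathcal{O}_{I_{2}}^{m} \\
1 & 1
\end{pmatrix}
\right)
$$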

where $m$ is the number of nodes in the input layer. This may look hideous, but the point is that all of the training examples that are input to the network can be dealt with simultaneously, because each example becomes another column in the input matrix and a corresponding column in the output matrix.
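The "one column per example" idea can be checked numerically. The following is a sketch under the same assumptions as before (NumPy, sigmoid transfer function, made-up sizes and random values): it pushes five examples through a layer in one matrix product and confirms the result matches processing them one at a time.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

rng = np.random.default_rng(1)

m, n, n_examples = 3, 2, 5
W_IJ = rng.normal(size=(n, m + 1))     # shared weights, bias weights in the last column
O_I = rng.random((m, n_examples))      # each column is one training example

# Vectorised: append a row of ones (the bias node) and do one matrix product.
O_I_aug = np.vstack([O_I, np.ones((1, n_examples))])
O_J_batch = sigmoid(W_IJ @ O_I_aug)    # shape (n, n_examples)

# One example at a time, for comparison only.
O_J_loop = np.hstack([
    sigmoid(W_IJ @ np.vstack([O_I[:, [e]], np.ones((1, 1))]))
    for e in range(n_examples)
])

assert np.allclose(O_J_batch, O_J_loop)
```

Column `e` of `O_J_batch` is the layer's output for example `e`, which is exactly the correspondence between input and output columns described above.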

Back Propagation

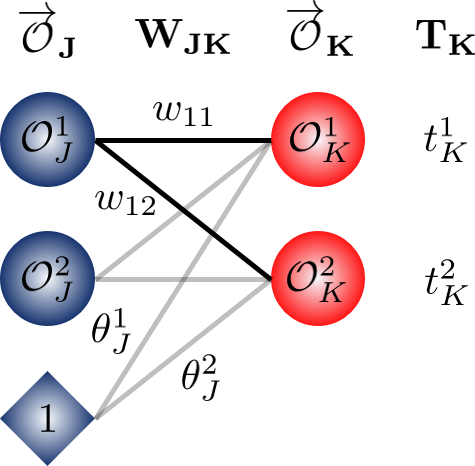

To perform back propagation there are a couple of things that we need to vectorise. The first is the error on the weights when we compare the output of the network $\mathbf{\vec{\mathcal{O}}_{K}}$ with the known target values:

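A reminder of the formulae, with $t_{k}$ the known target value for output node $k$:

$$
\delta_{k} = \mathcal{O}_{k} \left( 1 - \mathcal{O}_{k} \right) \left( \mathcal{O}_{k} - t_{k} \right),
\qquad
\delta_{j} = \mathcal{O}_{j} \left( 1 - \mathcal{O}_{j} \right) \sum_{k} \delta_{k} w_{jk}
$$

Here, $\delta_{k}$ is the error on the weights to the output layer and $\delta_{j}$ is the error on the weights to the hidden layers. We also need to vectorise the update formulae for the weights and bias:

$$
\Delta w = -\eta \ \delta_{l} \ \mathcal{O}_{l-1},
\qquad
\Delta \theta = -\eta \ \delta_{l}
$$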

Vectorising the Output Layer Deltas

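Let's look at the output layer delta: we need a subtraction between the outputs and the target, which is multiplied by the derivative of the transfer function (sigmoid). Well, the subtraction between two matrices is straightforward (writing $\mathbf{\vec{T}_{K}}$ for the vector of target values):

$$
\mathbf{\vec{\mathcal{O}}_{K}} - \mathbf{\vec{T}_{K}}
$$

but we need to consider the derivative. Remember that the output of the final layer is:

$$
\mathbf{\vec{\mathcal{O}}_{K}} = \sigma \left( \mathbf{W_{JK}} \, \mathbf{\vec{\mathcal{O}}_{J}} \right)
$$

and the derivative can be written:

$$
\sigma' \left( \mathbf{W_{JK}} \, \mathbf{\vec{\mathcal{O}}_{J}} \right) = \mathbf{\vec{\mathcal{O}}_{K}} * \left( 1 - \mathbf{\vec{\mathcal{O}}_{K}} \right)
$$

Note: This is the derivative of the sigmoid as evaluated at each of the nodes in the layer $K$. It is acting elementwise on the inputs to layer $K$. Thus it is a column vector with the same length as the number of nodes in layer $K$.

Put the derivative and subtraction terms together and we get:

$$
\mathbf{\vec{\delta}_{K}} = \sigma' \left( \mathbf{W_{JK}} \, \mathbf{\vec{\mathcal{O}}_{J}} \right) * \left( \mathbf{\vec{\mathcal{O}}_{K}} - \mathbf{\vec{T}_{K}} \right)
$$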

Again, the derivatives are being multiplied elementwise with the results of the subtraction. Now we have a vector of deltas for the output layer $K$! Things aren’t so straightforward for the deltas in the hidden layers.

Let’s visualise what we’ve seen:

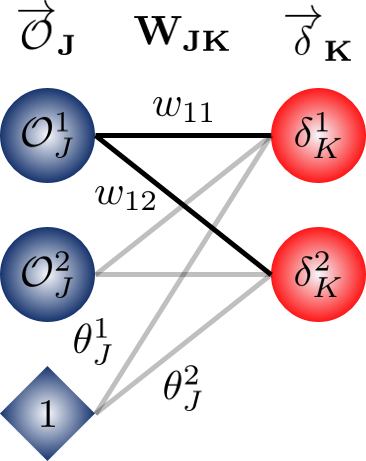
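In code, the output layer deltas are a one-liner once the pieces are column vectors. This is a sketch assuming NumPy and a sigmoid output layer; `x_K`, `targets` and their values are invented for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_derivative(x):
    """Derivative of the sigmoid, evaluated elementwise at the inputs x."""
    s = sigmoid(x)
    return s * (1.0 - s)

x_K = np.array([[0.0], [2.0]])       # inputs to the two output nodes
O_K = sigmoid(x_K)                   # outputs of the network
targets = np.array([[1.0], [0.0]])   # known target values

# Elementwise product of the derivative with (output - target).
delta_K = sigmoid_derivative(x_K) * (O_K - targets)
```

For the first node the output is $0.5$, the derivative is $0.25$ and the error is $-0.5$, so its delta is $-0.125$. Note that NumPy's `*` is the elementwise product, not the matrix product (`@`).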

Vectorising the Hidden Layer Deltas

We need to vectorise:

$$
\delta_{j} = \mathcal{O}_{j} \left( 1 - \mathcal{O}_{j} \right) \sum_{k} \delta_{k} w_{jk}
$$

Let’s deal with the summation. We’re multiplying each of the deltas $\delta_{k}$ in the output layer (or more generally, the subsequent layer, which could be another hidden layer) by the weight $w_{jk}$ that pulls them back to the node $j$ in the current layer before adding the results. For the first node in the hidden layer:

$$
\sum_{k} \delta_{k} w_{1k} = \delta_{1} w_{11} + \delta_{2} w_{12} + \delta_{3} w_{13} + \cdots
$$

Notice the weights? They pull the delta from each output layer node back to the first node of the hidden layer. In forward propagation, we collected the weights running into a single node from every node in the previous layer; here we collect the weights running out of a single node to every node in the subsequent layer, so it is now the first index that stays fixed rather than the second.

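Combine this summation with the multiplication by the activation function derivative:

$$
\delta_{j=1} = \sigma' \left( x_{j=1} \right) \sum_{k} \delta_{k} w_{1k}
$$

remembering that the input to the $\text{1}^\text{st}$ node in the layer $J$ is:

$$
x_{j=1} = \sum_{i} \mathcal{O}_{i} \, w_{i1}
$$

with the bias included as the extra, always-1 node in $I$.

What about the $\text{2}^\text{nd}$ node in the hidden layer?

$$
\delta_{j=2} = \sigma' \left( x_{j=2} \right) \sum_{k} \delta_{k} w_{2k}
$$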

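This is looking familiar. Hopefully we can be confident, based upon what we’ve done before, in saying that:

$$
\begin{pmatrix} \delta_{j=1} \\ \delta_{j=2} \\ \vdots \end{pmatrix} =
\begin{pmatrix} \sigma'(x_{j=1}) \\ \sigma'(x_{j=2}) \\ \vdots \end{pmatrix} *
\begin{pmatrix}
w_{11} & w_{12} & \cdots \\
w_{21} & w_{22} & \cdots \\
\vdots & \vdots & \ddots
\end{pmatrix}
\begin{pmatrix} \delta_{k=1} \\ \delta_{k=2} \\ \vdots \end{pmatrix}
$$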

We’ve seen a version of this weights matrix before when we did the forward propagation vectorisation. In this case though, look carefully - as we mentioned, the weights are not in the same places, in fact, the weight matrix has been transposed from the one we used in forward propagation. This makes sense because we’re going backwards through the network now! This is useful because it means there is very little extra calculation needed here - the matrix we need is already available from the forward pass, but just needs transposing. We can call the weights in back propagation here $ \mathbf{ W_{KJ}} $ as we’re pulling the deltas from $K$ to $J$.
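A quick numerical check of the transpose claim, as a sketch rather than a definitive implementation. It assumes NumPy and sigmoid activations, uses random made-up values, and assumes `W_JK` holds only the node-to-node weights (no bias column), since the always-1 bias node has no delta of its own to receive.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_derivative(x):
    s = sigmoid(x)
    return s * (1.0 - s)

rng = np.random.default_rng(2)

n_J, n_K = 3, 2
W_JK = rng.normal(size=(n_K, n_J))   # forward-pass layout: row k, column j holds w_jk
delta_K = rng.normal(size=(n_K, 1))  # deltas already computed for layer K
x_J = rng.normal(size=(n_J, 1))      # inputs to the hidden layer J from the forward pass

# Vectorised: the transposed forward weights pull the deltas back to layer J.
delta_J = sigmoid_derivative(x_J) * (W_JK.T @ delta_K)

# The same thing written as the explicit summation over k for each node j.
delta_J_loop = np.zeros((n_J, 1))
for j in range(n_J):
    pulled_back = sum(delta_K[k, 0] * W_JK[k, j] for k in range(n_K))
    delta_J_loop[j, 0] = sigmoid_derivative(x_J[j, 0]) * pulled_back

assert np.allclose(delta_J, delta_J_loop)
```

`W_JK.T` plays the role of $\mathbf{W_{KJ}}$ here: nothing new is computed, the forward-pass matrix is simply read the other way round.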

Let’s visualise what we’ve seen:

Vectorising the Update Equations

Finally, now that we have the vectorised equations for the deltas (which required us to get the vectorised equations for the forward pass) we’re ready to get the update equations in vector form. Let’s recall the update equations:

$$
\Delta w = -\eta \ \delta_{l} \ \mathcal{O}_{l-1},
\qquad
\Delta \theta = -\eta \ \delta_{l}
$$

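Ignoring the $-\eta$ for now, we need to get a vector form for $\delta_{l} \ \mathcal{O}_{l-1}$ in order to get the update to the weights. We have the matrix of weights connecting layer $J$ to layer $K$:

$$
\mathbf{W_{JK}} =
\begin{pmatrix}
w_{11} & w_{21} & \cdots \\
w_{12} & w_{22} & \cdots \\
\vdots & \vdots & \ddots
\end{pmatrix}
$$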

Suppose we are updating the weight $w_{21}$ in the matrix. We’re looking to find the product of the output from the second node in $J$ with the delta from the first node in $K$.

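Considering this example, we can write the matrix for the weight updates, with $\delta_{k}$ the deltas in $K$ and $\mathcal{O}_{j}$ the outputs from $J$, as:

$$
\begin{pmatrix}
\delta_{1} \mathcal{O}_{1} & \delta_{1} \mathcal{O}_{2} & \cdots \\
\delta_{2} \mathcal{O}_{1} & \delta_{2} \mathcal{O}_{2} & \cdots \\
\vdots & \vdots & \ddots
\end{pmatrix} =
\begin{pmatrix} \delta_{1} \\ \delta_{2} \\ \vdots \end{pmatrix}
\begin{pmatrix} \mathcal{O}_{1} & \mathcal{O}_{2} & \cdots \end{pmatrix}
$$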

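Generalising this into vector notation and including the learning rate $\eta$, the update for the weights from layer $J$ to layer $K$ is:

$$
\Delta \mathbf{W_{JK}} = -\eta \ \mathbf{\vec{\delta}_{K}} \ \mathbf{\vec{\mathcal{O}}_{J}}^{\intercal}
$$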

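Similarly, we have the update to the bias term. If:

$$
\Delta \theta = -\eta \ \delta_{l}
$$

then in vector form:

$$
\Delta \mathbf{\vec{\theta}_{l}} = -\eta \ \mathbf{\vec{\delta}_{l}}
$$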

So the bias term is updated just by taking the deltas straight from the nodes in the subsequent layer (with the negative factor of learning rate).

Here and throughout, $*$ represents an elementwise multiplication between the matrices.
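The update step can be sketched as an outer product of the deltas with the previous layer's outputs. As before this assumes NumPy, handles a single training example, and uses invented values; `eta`, `O_J` and `delta_K` are illustrative names only.

```python
import numpy as np

eta = 0.1                                # learning rate
O_J = np.array([[0.2], [0.7], [0.5]])    # outputs from the 3 nodes in layer J
delta_K = np.array([[0.1], [-0.3]])      # deltas for the 2 nodes in layer K

# Outer product: entry (k, j) is delta_k * O_j, matching the
# forward-pass layout of W_JK (row k, column j holds w_jk).
delta_W_JK = -eta * (delta_K @ O_J.T)    # shape (2, 3)

# The bias update is just the deltas scaled by the learning rate.
delta_theta_K = -eta * delta_K           # shape (2, 1)
```

The update for $w_{21}$ sits in the first row, second column of `delta_W_JK`: the delta from the first node in $K$ ($0.1$) times the output from the second node in $J$ ($0.7$), scaled by $-\eta$, giving $-0.007$.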

What's next?

Although this kind of mathematics can be tedious and sometimes hard to follow (and probably with numerous notation mistakes… please let me know if you find them!), it is necessary in order to write a quick, efficient NN. Our next step is to implement this setup in Python.
